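The companion code for this series is available in two ways:

- Via Baidu Netdisk:
  Link: https://pan.baidu.com/s/1XuxKa9_G00NznvSK0cr5qw?pwd=mnsj  Extraction code: mnsj
- Via GitHub:
  https://github.com/returu/PyTorch

【Deep Learning (PyTorch)】32. Image Classification with a Convolutional Neural Network (CIFAR-10 Dataset)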

The code is as follows:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torch.utils.data import DataLoader
from torchvision import datasets, transforms
from torchvision.utils import make_grid
import visdom

# Select the device
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# Define the model
class Net(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 6, 5)        # 3x32x32 -> 6x28x28
        self.bn1 = nn.BatchNorm2d(6)
        self.pool = nn.MaxPool2d(2, 2)         # halves height and width
        self.conv2 = nn.Conv2d(6, 16, 5)       # 6x14x14 -> 16x10x10
        self.bn2 = nn.BatchNorm2d(16)
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)           # one output per CIFAR-10 class

    def forward(self, x):
        x = self.pool(F.relu(self.bn1(self.conv1(x))))
        x = self.pool(F.relu(self.bn2(self.conv2(x))))
        x = x.view(-1, 16 * 5 * 5)             # flatten to (batch, 400)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        return x

# Initialize the model, loss function, and optimizer
net = Net().to(device)
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)

# Data preprocessing: convert to tensors, then normalize each channel with the CIFAR-10 mean/std
transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.4915, 0.4823, 0.4468), (0.2470, 0.2435, 0.2616)),
])

# Load the datasets
trainset = datasets.CIFAR10('./data/', train=True, download=True, transform=transform)
train_loader = DataLoader(trainset, batch_size=64, shuffle=True)
testset = datasets.CIFAR10('./data/', train=False, download=True, transform=transform)
test_loader = DataLoader(testset, batch_size=64, shuffle=False)  # no need to shuffle for evaluation

# Train the network
net.train()  # training mode: BatchNorm uses per-batch statistics
for epoch in range(10):  # loop over the whole dataset several times
    running_loss = 0.0
    correct = 0
    total = 0
    for i, data in enumerate(train_loader, 0):
        # Get the inputs
        inputs, labels = data[0].to(device), data[1].to(device)
        # Zero the gradient buffers
        optimizer.zero_grad()
        # Forward pass, backward pass, optimization step
        outputs = net(inputs)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()
        # Accumulate loss and accuracy statistics
        running_loss += loss.item()
        _, predicted = torch.max(outputs, 1)
        total += labels.size(0)
        correct += (predicted == labels).sum().item()
        # Print statistics every 200 mini-batches
        if (i + 1) % 200 == 0:
            train_acc = 100 * correct / total
            print(f'[{epoch + 1}, {i + 1}] loss: {(running_loss / 200):.3f}, train acc: {train_acc:.2f}')
            running_loss = 0.0
            correct = 0
            total = 0

print('Finished Training')

# Test the network
net.eval()  # evaluation mode: BatchNorm uses its running statistics
correct = 0
total = 0
loss_sum = 0.0
with torch.no_grad():
    for data in test_loader:
        images, labels = data[0].to(device), data[1].to(device)
        outputs = net(images)
        loss = criterion(outputs, labels)  # compute the loss
        loss_sum += loss.item()            # accumulate the loss
        _, predicted = torch.max(outputs, 1)
        total += labels.size(0)
        correct += (predicted == labels).sum().item()

# Average loss per test batch
average_loss = loss_sum / len(test_loader)
print(f'Accuracy of the network on the 10000 test images: {100 * correct / total}%')
print(f'Average loss on the 10000 test images: {average_loss}')
```
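The `16 * 5 * 5` input size of `fc1` comes from the usual output-size formula for convolution and pooling, out = floor((in + 2·padding − kernel) / stride) + 1. The pure-Python sketch below traces one 32×32 CIFAR-10 image through the layers. It assumes the settings used above: 5×5 kernels with stride 1 and no padding, and 2×2 max pooling with stride 2 (PyTorch's default `ceil_mode=False`, so the result is floored). The helpers `conv_out` and `trace_shapes` are hypothetical names used only for illustration.

```python
def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a convolution or pooling layer (floor division)."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_shapes(size: int = 32) -> list:
    """Follow one image through conv1 -> pool -> conv2 -> pool of the Net above.

    Returns a list of (layer name, channels, height/width) tuples.
    """
    shapes = []
    size = conv_out(size, kernel=5)            # conv1: Conv2d(3, 6, 5)
    shapes.append(("conv1", 6, size))
    size = conv_out(size, kernel=2, stride=2)  # pool: MaxPool2d(2, 2)
    shapes.append(("pool1", 6, size))
    size = conv_out(size, kernel=5)            # conv2: Conv2d(6, 16, 5)
    shapes.append(("conv2", 16, size))
    size = conv_out(size, kernel=2, stride=2)  # the same pooling layer again
    shapes.append(("pool2", 16, size))
    return shapes


for name, channels, hw in trace_shapes():
    print(f"{name}: {channels} x {hw} x {hw}")

_, c, hw = trace_shapes()[-1]
print("fc1 input features:", c * hw * hw)
```

Running it prints `conv1: 6 x 28 x 28` first, which matches the `torch.Size([64, 6, 28, 28])` comment in the feature-map code, and ends with `fc1 input features: 400`.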

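Building on the simple convolutional neural network above, this post uses TensorBoard and then Visdom to visualize the following:

- the loss and accuracy during training;
- histograms of each layer's weight parameters;
- the output feature maps of the first convolutional layer.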

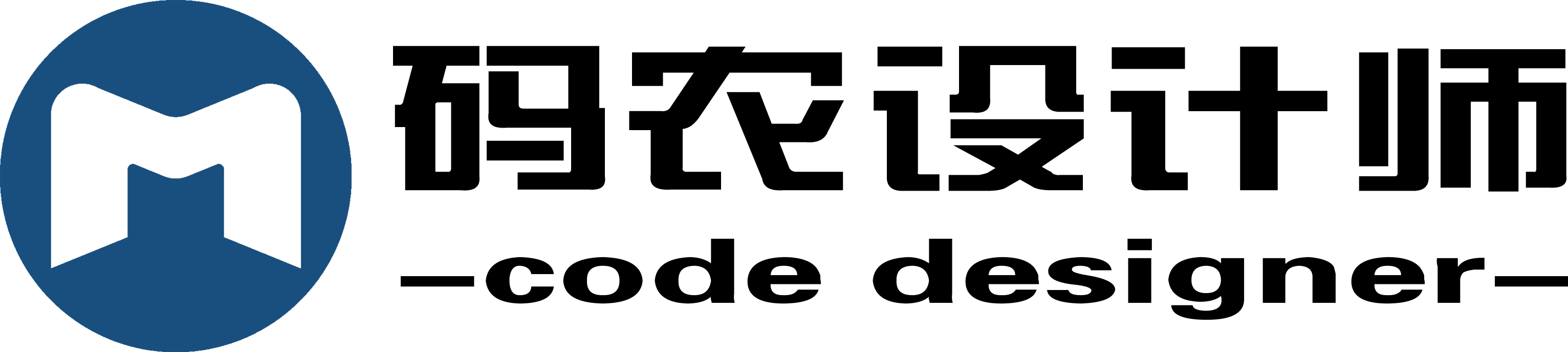

TensorBoard:

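```python
from torch.utils.tensorboard import SummaryWriter  # needed for TensorBoard logging

# Select the device
# ... code unchanged ...

# Initialize TensorBoard's SummaryWriter (event files are written to ./runs)
writer = SummaryWriter(log_dir='runs')

# Define the model
# ... code unchanged ...

# Initialize the model and optimizer
# ... code unchanged ...

# Data preprocessing
# ... code unchanged ...

# Load the datasets
# ... code unchanged ...

# Train the network
for epoch in range(10):  # loop over the whole dataset several times
    # ... code unchanged ...
    for i, data in enumerate(train_loader, 0):
        # ... code unchanged ...
        # Print statistics and update TensorBoard every 200 mini-batches
        if (i + 1) % 200 == 0:
            train_acc = 100 * correct / total
            print(f'[{epoch + 1}, {i + 1}] loss: {(running_loss / 200):.3f}, train acc: {train_acc:.2f}')
            # Log training loss and accuracy to TensorBoard;
            # the global step is the mini-batch count across all epochs
            global_step = epoch * len(train_loader) + i + 1
            writer.add_scalar('Loss/train', running_loss / 200, global_step)
            writer.add_scalar('Accuracy/train', train_acc, global_step)
            running_loss = 0.0
            correct = 0
            total = 0

print('Finished Training')

# Histograms of each layer's weight parameters
for name, param in net.named_parameters():
    if 'weight' in name:
        writer.add_histogram(f'{name} Weights', param.data.cpu().numpy().flatten())

# Test the network
# ... code unchanged ...

# Visualize the output feature maps of the first convolutional layer
dataiter = iter(train_loader)
images, labels = next(dataiter)
images = images.to(device)
with torch.no_grad():  # no gradients are needed for visualization
    features = net.conv1(images)  # torch.Size([64, 6, 28, 28])
# Log channel 0 of each of the 64 images as an N x 1 x H x W batch
writer.add_images('Features', features.cpu()[:, :1, :, :])

# Close the SummaryWriter only after everything has been logged
writer.close()
```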
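The x-axis value `epoch * len(train_loader) + i + 1` passed to `add_scalar` (and used the same way in the Visdom version) is a running mini-batch count across epochs. CIFAR-10 has 50,000 training images, and the loader uses `batch_size=64` with the default `drop_last=False`, so `len(train_loader)` is ceil(50000 / 64) = 782. The pure-Python sketch below reproduces the logging schedule under those assumptions. `logged_steps` is a hypothetical helper, not part of TensorBoard or Visdom.

```python
import math


def logged_steps(num_samples: int = 50_000, batch_size: int = 64,
                 epochs: int = 10, log_every: int = 200) -> list:
    """Global steps at which the training loop above records a loss/accuracy point."""
    batches_per_epoch = math.ceil(num_samples / batch_size)  # len(train_loader) when drop_last=False
    steps = []
    for epoch in range(epochs):
        for i in range(batches_per_epoch):
            if (i + 1) % log_every == 0:
                steps.append(epoch * batches_per_epoch + i + 1)
    return steps


steps = logged_steps()
print(len(steps), steps[:6], steps[-1])
```

This prints `30 [200, 400, 600, 982, 1182, 1382] 7638`, so each epoch contributes three points.

The running counters are reset at the start of every epoch. As a result, the last 182 mini-batches of each epoch (782 − 600) never show up in the logged curves.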

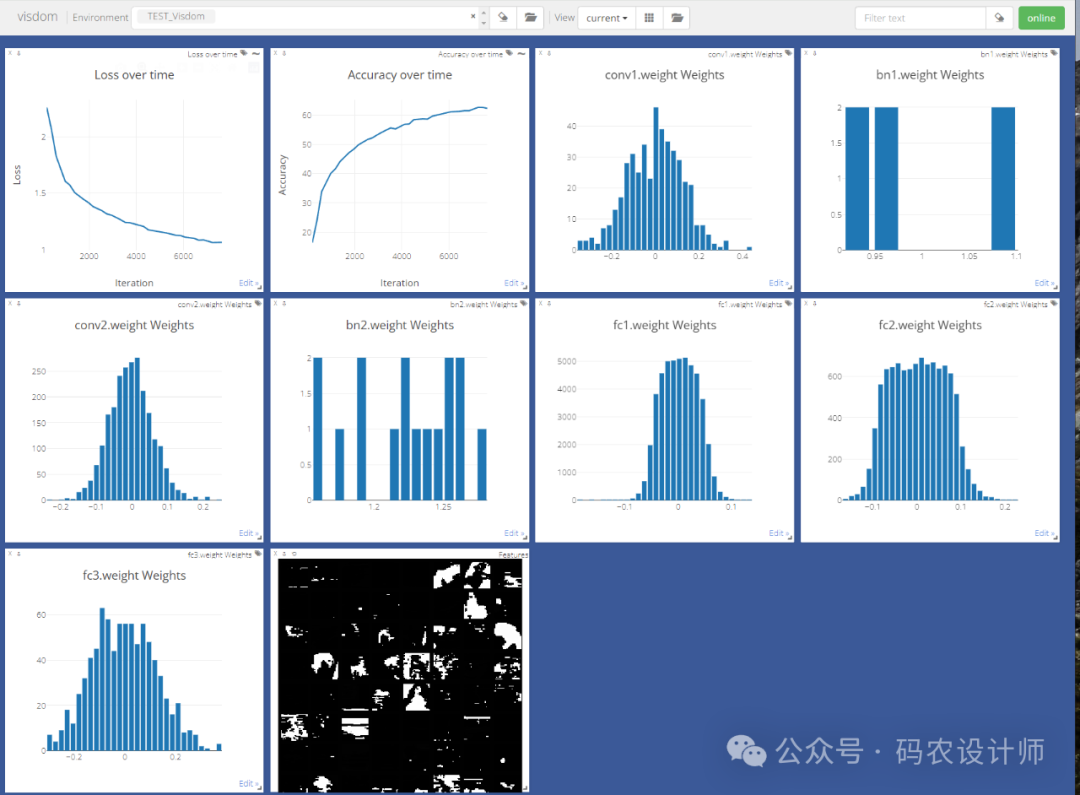

Visdom:

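```python
import numpy as np  # needed to build the point arrays passed to vis.line

# Select the device
# ... code unchanged ...

# Initialize Visdom (a Visdom server must be running, e.g. started with `python -m visdom.server`)
vis = visdom.Visdom(env='TEST_Visdom')

# Define the model
# ... code unchanged ...

# Initialize the model and optimizer
# ... code unchanged ...

# Data preprocessing
# ... code unchanged ...

# Load the datasets
# ... code unchanged ...

# Train the network
for epoch in range(10):  # loop over the whole dataset several times
    # ... code unchanged ...
    for i, data in enumerate(train_loader, 0):
        # ... code unchanged ...
        # Print statistics and update Visdom every 200 mini-batches
        if (i + 1) % 200 == 0:
            train_acc = 100 * correct / total
            print(f'[{epoch + 1}, {i + 1}] loss: {(running_loss / 200):.3f}, train acc: {train_acc:.2f}')
            # Append one point each to the Visdom loss and accuracy charts
            vis.line(X=np.array([epoch * len(train_loader) + i + 1]),
                     Y=np.array([running_loss / 200]),
                     win='window_loss',
                     update='append',
                     opts=dict(title='Loss over time', xlabel='Iteration', ylabel='Loss'))
            vis.line(X=np.array([epoch * len(train_loader) + i + 1]),
                     Y=np.array([train_acc]),
                     win='window_acc',
                     update='append',
                     opts=dict(title='Accuracy over time', xlabel='Iteration', ylabel='Accuracy'))
            running_loss = 0.0
            correct = 0
            total = 0

print('Finished Training')

# Histograms of each layer's weight parameters
for name, param in net.named_parameters():
    if 'weight' in name:
        vis.histogram(param.data.cpu().numpy().flatten(), opts=dict(title=f'{name} Weights'))

# Test the network
# ... code unchanged ...

# Visualize the output feature maps of the first convolutional layer
dataiter = iter(train_loader)
images, labels = next(dataiter)
images = images.to(device)
with torch.no_grad():  # a tensor that requires grad cannot be converted to NumPy
    features = net.conv1(images)  # torch.Size([64, 6, 28, 28])
# Show channel 0 of each of the 64 images, 8 per row
vis.images(features.cpu()[:, :1, :, :], nrow=8, opts=dict(title='Features'))
```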
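Convolution outputs are unbounded and often negative, while image viewers expect pixels in a fixed range. TensorBoard's `add_images`, for example, documents float images as lying in [0, 1] and clips values outside it. One common remedy, which is not part of the original article, is to min-max scale each feature map before logging it. Below is a NumPy sketch of that idea. `normalize_feature_maps` is a hypothetical helper, and random data stands in for `features.cpu().numpy()[:, :1, :, :]`.

```python
import numpy as np


def normalize_feature_maps(maps: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Min-max scale each (C, H, W) map of an N x C x H x W batch into [0, 1].

    Each image is scaled by its own minimum and maximum, so a map with weak
    activations stays visible next to one with strong activations.
    """
    maps = maps.astype(np.float32)
    lo = maps.min(axis=(1, 2, 3), keepdims=True)  # per-image minimum
    hi = maps.max(axis=(1, 2, 3), keepdims=True)  # per-image maximum
    return (maps - lo) / (hi - lo + eps)          # eps avoids dividing by zero for constant maps


# Random stand-in for the 64 x 1 x 28 x 28 first-channel feature maps
rng = np.random.default_rng(0)
fake_features = rng.normal(loc=0.0, scale=3.0, size=(64, 1, 28, 28))
scaled = normalize_feature_maps(fake_features)
print(scaled.shape, float(scaled.min()), float(scaled.max()))
```

With real data, the call would look something like `vis.images(normalize_feature_maps(features.cpu().numpy()[:, :1, :, :]), nrow=8, opts=dict(title='Features'))`, or the equivalent `writer.add_images(...)`. Per-image scaling shows the structure of each map. Scaling by the whole batch's min and max instead preserves differences in activation strength between images.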

The visualization results are shown below:

For more, see the official website:

https://pytorch.org/

This article originally appeared on the WeChat public account 码农设计师.